Amid the intrepidly disjointed cacophony of strident disillusionment conjured by the clickbait avatars of industrialized politicization, the whispers of innovation, though largely drowned out, still exist. While the majority of society gathers to quench a pretended thirst at the communal watering hole of divisiveness, burgeoning technology is being molded to fit the curious and spooky confines of the quantum universe, drawn from a quaint and pristine aquifer obscured by failing attention spans. In rare moments of collective lucidity, an idea or concept that is abrasively incongruent with the 15-second news cycle, but genuinely enlightening, is worth the time and the effort to address.

With the classic underlying model of a computer being strained by the reality of information overload, a specific and disciplined community of scientists and engineers is exploring ways to push past the current speed limit of computation set by classical physics. The cutting-edge undertaking is a bold stab into the world of experimentation, considering the questionable validity of the climate debate and the unwillingness of the tech world to accept any responsibility or scrutiny for the heaping mass of energy required to shoulder the insatiable demands of keeping the internet operational and storing an unfathomable wealth of data. Professional hypocrisy aside, the world is starved for a narrative that does not involve a blow-by-blow social media outburst, or the insufferable broken-record talking points about bots and algorithms from Russia and North Korea interfering with an election three years in the past. Quantum computing may never be a water cooler staple of interoffice camaraderie, but recent developments and a possible breakthrough in the valuable technology are far more intriguing than news of dejected Twitter users craving hugs and juice in the aftermath of dueling anonymous hissy fits mocking the progression of the real world.

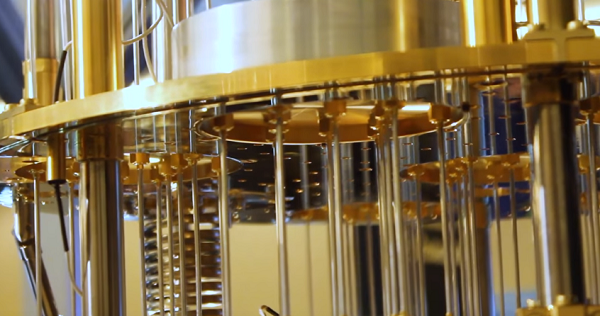

Currently, the continuously evolving dinosaur, IBM, boasts the Rolls-Royce of quantum computers, which, if ever fully operational, will allow for a seemingly limitless magnitude of processing power. The 50-qubit (“quantum bit”) machine is not based on the simple binary model of the traditional computer. In binary, information is manifested as a bit, existing in a single state with the value of either “0” or “1”. A qubit can instead hold a blend of both values at the same time. This opens up the exponential magic of mathematics: each added qubit doubles the number of states the machine can represent at once. Thus, a single qubit spans 2 to the first power states, and 50 qubits span 2 to the 50th power, a daunting 16-digit number, while 50 bits hold just one 50-bit value at a time. It all has to do with the quantum property of superposition. The end game allows for simulations at a resolution compatible with the molecular scale; however, the prevalence of practical issues is hindering the IBM quantum computer from operating at full capacity. Enter researchers from MIT and Dartmouth.
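For anyone who wants to check the arithmetic rather than take it on faith, here is a minimal sketch in Python. The function names `basis_state_count` and `decimal_digits` are made up for illustration, and the code only counts states; it does not simulate a quantum computer or model IBM's hardware in any way.

```python
def basis_state_count(n_qubits: int) -> int:
    """Return 2**n, the number of basis states an n-qubit register spans."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    return 2 ** n_qubits


def decimal_digits(value: int) -> int:
    """Return how many decimal digits a non-negative integer has."""
    return len(str(value))


if __name__ == "__main__":
    # Each added qubit doubles the count, so growth is exponential in n.
    for n in (1, 2, 10, 50):
        count = basis_state_count(n)
        print(f"{n:>2} qubits -> {count:,} states ({decimal_digits(count)} digits)")
```

The doubling is the whole trick: going from 49 to 50 qubits adds as many states as all of the previous 49 qubits spanned combined.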

As the efficiency of quantum computers is detrimentally affected by “background noise”, reports MIT News, scientists are developing a sensor that detects the potentially harmful interference standing in the way of an optimal operating standard. The detecting device will evaluate extraneous sources of possible disturbances, including energy displacement, disruptive wireless frequencies, and impurities within the materials of the solid-state components themselves, hopefully giving scientists a playbook to stabilize and harness a powerful upgrade to contemporary computing. The art of engineering the precarious quantum state of “superposition”, where a particle can exist in multiple states at one time, has far-reaching applications in scientific and technological fields and, for certain problems, could cut the time spent solving complex equations from years to days. Maybe quantum bits can help to eliminate the annoying presence of social media bots.

An insightful quote by mathematician Jonathan Hui captures the excitement and game-changing possibilities of the technology in a chilling and eloquent fashion: “Increasing the qubits linearly, we expand the information capacity exponentially.”

The question remains: is the apparent upgrade to quantum systems more economically feasible than the current framework of servers and computers, and do the energy requirements needed to maintain the plausible infrastructure justify a complete restructuring of a workable and reliable template for the information superhighway? Ironically, the frenzied height of the bitcoin mining craze strained the limited power grids of many second and third world countries, where entrepreneurs took advantage of minuscule costs to profit from the hysteria.

While the next steps for quantum computing will involve the stabilization of superpositions, concerns surrounding the allocation of finite energy resources remain a crucial and ongoing discussion in making sure that the necessary steps are taken to ensure redundancy.

Read the MIT article here.